It's been a while since I posted anything; it's been a funny month. Work end-of-quarter things (yes, I know the date), personal and work travel, and then following Brexit and its effects it was just so depressing to even write anything. Fortunately on that front there are plenty of far better writers than I arguing with the same kind of intensity I like to see - I'd recommend Alastair Campbell as someone to follow there. I started playing Fallout 4, and there went a couple of hours a night (just one more mission...), but I was also spending some time researching and building my new home office PC. Now for those of you looking for business or startup commentary, stop reading here, because the rest of this post is about building a PC. No kidding.

My current PC is getting a bit old, nearly 5 years. I've gone that length of time between building brand new systems before, but in those cases they have been slowly evolving, single parts changed at a time, until usually by the end it's nothing but the case that remains from the start. In this situation though, it's basically been the same hardware the whole time. It seems to be a sign of the maturing of the industry that the refresh on computer hardware is now moving to more than 3 years, when it used to be that after 18 months you felt you were falling behind. Mobile is now where the rapid obsolescence is, though even there the cycle time is slowing, and with some phones becoming modular we could see a similar shift in patterns and a move to upgrades rather than new purchases.

My home use involves a mix of the usual web browsing, email, and docs/spreadsheets, but also some work in numerical simulation, CAD, and visualization, and it was in those latter three that I was really noticing the age of my system. That old system is going to get repurposed as a basic work machine, or maybe a Plex server for the house - even at 5 years old it's still pretty good. The Sandy Bridge chipset was a solid base, an i7 CPU at 3.4GHz, I had one of the early SSDs in it, and a 6 series nVidia graphics card. So where was it falling down?

Interestingly it was not really in the CPU that the problem lay. The new CPU is 4GHz compared to the 3.4GHz of the old, both are 4 core with hyperthreading, and even with the IPC improvements you get in each generation, you're looking at only a ~25% improvement in raw power. I could pretty much have stuck with the old CPU and not noticed too much difference; it's in all the other parts that I wanted an improvement.

Visualisation needs a good graphics card, CAD seems to eat up any improvements to power (similar to the old "What Intel giveth, Microsoft taketh away"), and the 6 series nVidia card was just not cutting it anymore. Further, GPU computing is really where leaps are being made now, not in CPU, and there have been major improvements here over the last few generations (not just scientific computing, but machine learning/AI), with multiple new architectures. nVidia just released their 10 series with the Pascal architecture, so for the GPU computing and to be working with the newer capabilities, I got one of their 1070 cards (I wanted a 1080, but paying nearly double for about 30% better performance just wasn't going to happen). To get that card I needed a motherboard that could support PCIe 3.0 16x lanes, slots/PCIe lanes for future GPU SLI (a second graphics card in parallel), and a PSU that could deliver all that power - my current system couldn't do that, and any upgrades would have been as expensive, and likely less effective, than going new.

I'm also fed up waiting for large datasets to save and load to disk. While I was using a Samsung 850 SSD, with read and write speeds around 400 MB/s, which is way better than HDD (around 100 MB/s), it still gets annoying when you deal with 10GB of data writing to and from the disk. SATA SSDs also connect through SATA III and so are limited to 6Gbps. Fortunately the newer NVMe M2 SSDs now read at up to about 2.5GBps and write at 1.5GBps (yes B for 'bytes' not b for 'bits'), and because they connect through PCIe, a 4x lane 3.0 setup will in theory limit at around 32 Gbps. So a new M2 SSD will at most use around 35 to 60% of the available bandwidth and not be limited.
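As a quick sanity check on the bits-versus-bytes arithmetic, here's a small sketch. The 32 Gbps link figure and the drive speeds are just the round numbers quoted above, not measurements, and the function names are made up for illustration.

```python
# Rough link-utilisation arithmetic using the round numbers quoted in the post.
BITS_PER_BYTE = 8
PCIE3_X4_GBITS = 32.0  # nominal ceiling for a PCIe 3.0 x4 link, as used above


def gbytes_to_gbits(gbytes_per_s: float) -> float:
    """Convert a rate in GB/s (bytes) to Gb/s (bits)."""
    return gbytes_per_s * BITS_PER_BYTE


def link_utilisation(drive_gbytes_per_s: float, link_gbits_per_s: float) -> float:
    """Fraction of the link a drive would occupy at its peak rate."""
    return gbytes_to_gbits(drive_gbytes_per_s) / link_gbits_per_s


for label, speed in [("NVMe read", 2.5), ("NVMe write", 1.5)]:
    used = link_utilisation(speed, PCIE3_X4_GBITS)
    print(f"{label}: {gbytes_to_gbits(speed):.0f} Gbps, {used:.1%} of the link")
```

With these inputs a single drive stays well under the link ceiling for both reads and writes, which is the point of the paragraph above.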

Intel's Z170 chipset seemed to be the right choice, with up to 36 PCIe 3.0 lanes (20 chipset, 16 CPU), and support for USB 3.0 and RAID. Looking at the various motherboards that used it, I liked what I'd been seeing and hearing about ASRock, and so looked at their Extreme7+ board. It had all the features I needed for now with room for expansion, an additional USB 3.1 port (doubles the transfer speed), Thunderbolt, boot from NVMe, and interestingly not one but three M2 sockets for SSDs. Anandtech had a good review of it where I saw this RAID option, but it didn't cover how well it worked, so now I started wondering if I could put multiple M2 SSDs in a RAID0 volume and triple the bandwidth?

Digging deeper, there was nothing that said that it wouldn't work, though also nothing concrete showing that it would. The 4x PCIe lanes are shared, so it would be a 32Gbps limit, likely less than that, and even if more lanes were available it all goes through the DMI which would bottleneck it at <32Gbps (though shared with anything else talking to the CPU so definitely lower than that). Three Samsung 950 Pro SSDs (the 512 GB ones, not the 256 GB, they have 1.5GBps and 0.9 GBps write speed respectively) would theoretically be up to 7.5 GBps read (60Gbps) and 4.5GBps write (36Gbps), while two would be 5 GBps read (40 Gbps) and 3 GBps write (24 Gbps) - so three SSDs would saturate the available bandwidth, two kinda straddles the limit. Given my budget, I decided to go with two of them as three probably wouldn't see the full gain, plus it was a risk in doing something I didn't know would work, and with two at worst I'd have a second fast storage drive. So I ordered the parts and built my first PC of the decade.
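The two-versus-three drive reasoning can be sketched in a few lines. This assumes ideal RAID0 scaling (per-drive rate times drive count) and treats the ~32 Gbps figure from above as a hard ceiling; real arrays will come in lower, and the names here are only illustrative.

```python
# Ideal RAID0 throughput versus a shared-link ceiling, using the post's numbers.
LINK_CAP_GBITS = 32.0   # approximate DMI / PCIe 3.0 x4 ceiling quoted above
READ_GBYTES = 2.5       # per-drive peak read for the 512 GB model
WRITE_GBYTES = 1.5      # per-drive peak write for the 512 GB model


def raid0_gbits(per_drive_gbytes: float, drives: int) -> float:
    """Ideal striped throughput in Gbps: per-drive rate times drive count."""
    return per_drive_gbytes * 8 * drives


def capped(gbits: float, cap: float = LINK_CAP_GBITS) -> float:
    """Throughput after applying the link ceiling."""
    return min(gbits, cap)


for n in (1, 2, 3):
    read = raid0_gbits(READ_GBYTES, n)
    write = raid0_gbits(WRITE_GBYTES, n)
    print(f"{n} drive(s): read {read:.0f} -> {capped(read):.0f} Gbps, "
          f"write {write:.0f} -> {capped(write):.0f} Gbps")
```

Under these assumptions two drives already hit the ceiling on reads while writes still fit, and a third drive only adds headroom that the link can't carry.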

Motherboard: ASRock Z170 Extreme7+

CPU: Intel i7-6700K 4GHz

GFX: ZOTAC GTX 1070 AMP!

SSD: Samsung 950 Pro PCIe3.0x4 512GB x2

HDD: WD Black 5TB

Memory: 2x16GB PC3400 G.SKILL

PSU: EVGA SuperNOVA 650W Platinum, Modular

Case: Phanteks Enthoo Pro PH-ES614PBK

Fan: CoolerMaster 212 EVO

DVD/CD/BD Drive: LG Black WH16NS40

A few notes on the parts. I made sure not to go with a Founders Edition of the GFX card, you pay more for lesser performance (yep). Memory was matched from the motherboard manufacturer's recommended page, and the PSU is large enough to handle future disk and GFX expansion. The Phanteks case had good reviews, and while larger than my existing case it seemed to be designed for good cable management and ease of access when building (which it really was). And finally I got an anti-static wrist strap so I don't zap any of my new expensive components while handling them. I ordered from NewEgg - I looked at Amazon but the GFX card wasn't in stock until August.

All the manufacturers did a nice job of packaging their products, they put the effort in with presentation and little extras to make them look like premier suppliers, and it worked. I'm too used to just getting brown boxes with nothing but the OEM part like the WD Disk in the middle there. Putting it together was really pretty straightforward, these days all the cables seem to be nicely labelled, and I only had to look at the MB manual to see the pin orientation for the power on and reset buttons.

One thing I did get caught with - the graphics card had two 8 pin power connectors (GFX cards draw more than the 75W allowed by PCIe so need additional), while each of the two VGA PSU connectors had an 8 (6+2) and a 6 pin connector. I hadn't expected that, so I ended up connecting to the one GFX card from the two PSU outlets. This is fine for now, but should I later put in another GFX card in SLI, I won't have the power connector. The manufacturer lists it as a 220W card so two 6 pins should be enough, and a 6 and an 8 should be more than enough (300W). Two full 8 pin power connectors for the GFX card seems excessive, so I don't know if it's really needed or not - more investigation needed here.
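The power budget is easy to tally. The sketch below uses the standard PCIe limits (75W from the slot, 75W per 6 pin connector, 150W per 8 pin connector) and the 220W figure the manufacturer lists; the helper name is made up for illustration.

```python
# Tally the power available to a graphics card for different connector combos.
SLOT_W = 75                     # power delivered through the PCIe slot itself
CONNECTOR_W = {6: 75, 8: 150}   # rated power per auxiliary connector, by pin count
CARD_W = 220                    # manufacturer's listed figure for this card


def available_power(connectors) -> int:
    """Slot power plus the rating of each auxiliary connector, in watts."""
    return SLOT_W + sum(CONNECTOR_W[pins] for pins in connectors)


for combo in [(6, 6), (6, 8), (8, 8)]:
    total = available_power(combo)
    print(f"{combo}: {total}W available, {total - CARD_W}W headroom")
```

On paper, then, two 8 pin connectors leave a lot of headroom over the listed draw, which fits the suspicion that they're there for overclocking margin rather than stock operation.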

The M2 slots for the SSDs are on the motherboard, it's not the old world of running a SATA (or IDE) cable to them anymore. They sit parallel with the motherboard itself, in between the PCIe slots. I chose to put my two SSDs in slots 1 and 3 as slot 2 was directly under the graphics card and I wanted both easier access and no possible heating issues. I was concerned I might have to move them if the RAID option didn't support skipping a slot, but it worked out fine. Use of the M2 slots does come at a cost - some of the SATA ports get disabled for each one used. By leaving M2_2 unused, that meant I had SATA3_2, 3_3, and EXP1 free, all others were disabled. This was fine, I only had the Bluray drive and the single HDD, but if you're planning on doing this and having multiple extra HDDs, then plan on using the ASMedia chip SATAIII ports (4 of them) or a PCIe add-in card.

As a side note, it's interesting now how small the storage has become, while the graphics cards are enormous (basically it looks to be almost all cooling that takes up the bulk of the volume, same as with the CPU/cooler combo).

Once it was all put together, I powered it up. Fans spun, a few lights flashed, but nothing else. The nice little LEDs on the motherboard reported error 53, which indicated a memory issue. I checked the memory was in the right slots (A2 and B2 according to the manual), then reseated it. This time the system POSTed and I got to the BIOS, which said I had only one memory DIMM. Power off, reseat the other one again, and restart. This time all memory was seen. And that was it for fiddling with connectors - so far all the USB ports, disks, sound etc have all worked fine, so nicely done to the various manufacturers for making the labeling that good.

Now came the fun part - it is fun, even though you curse it at the time - where I spent near 6 hours trying to get the system to see the SSDs in RAID. I got there eventually but it was a bit of a tortuous route, hopefully what I write here will remind me what I did if I have to do this again, and save someone else the headache of doing it themselves if they find this. First, make sure you have a spare USB drive and another PC to work with as you jump back and forth troubleshooting.

My motherboard was on BIOS v2.0, latest is 3.0 (as of July 16), so first of all flash the BIOS. You can get the latest for this board here, and it not only updates the board BIOS but the Intel Rapid Storage Technology firmware too which is a key part. Put it on the thumb drive, then in the BIOS go to the 'Tools' section and there's a utility to flash. It does both the Intel Management Engine and the BIOS. Reboot and then it should be able to do the next needed steps for putting the SSDs into a bootable RAID.

In the Advanced version of the BIOS, go to the Storage Configuration option. Set 'SATA Mode Selection' to 'RAID', 'OpROM Policy' to 'UEFI Only', and Enable the relevant M2_x slots. I saved and exited at this point (F10), though I don't think you need to, but I was overdoing that to be sure that wasn't the problem.

Then go to the Boot menu and scroll all the way down, where there's a 'CSM (Compatibility Support Module)' entry. Go in there and change everything to 'UEFI Only'.

Then back to the 'Advanced' tab, and now into the 'Intel Rapid Storage Technology' option, which should list your SSDs and the option to 'Create Volume'. Click that.

Then name your volume, select the disks (X) and it should default to the maximum size available. You have RAID0 (Stripe) and RAID1 (Mirror) options. Mirror buys no performance or storage gain, but heavy redundancy. RAID0 offers no redundancy (slightly increased chance of things going to hell, as when one of the two SSDs dies, that's it for everything), but increased performance and storage size. I went with RAID0. I left the strip size at 16KB, as what limited information I could find showed that it was not a concern for performance. Click 'Create Volume' and it should be done.
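For anyone wondering what striping actually does with that 16KB strip size, here's a minimal sketch of the textbook RAID0 layout: consecutive strips alternate between the disks. This is the generic scheme, not a description of how Intel's RST lays data out internally, and `locate` is a hypothetical helper.

```python
# Textbook RAID0 address mapping: consecutive strips rotate across the disks.
STRIP_BYTES = 16 * 1024  # the 16KB strip size left at its default above


def locate(offset: int, n_disks: int = 2, strip: int = STRIP_BYTES):
    """Map a logical byte offset to (disk index, byte offset on that disk)."""
    strip_index = offset // strip          # which strip the byte falls in
    disk = strip_index % n_disks           # strips rotate round-robin over disks
    row = strip_index // n_disks           # how many full rows precede it
    return disk, row * strip + offset % strip


# The first 16KB lands on disk 0, the next 16KB on disk 1, then back to disk 0.
for logical in (0, 16 * 1024, 32 * 1024, 48 * 1024 + 5):
    print(logical, "->", locate(logical))
```

Because a large sequential read touches both disks in turn, the two drives can work in parallel, which is where the bandwidth gain comes from. It's also why losing either disk loses every other strip of every large file.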

Now back to Boot tab, and set the primary boot to UEFI version of whatever you want to boot from, in my case the Bluray drive. At this point I did *not* see the M2 RAID array as available as an option in the BIOS which had me fooled for a bit. Pop in the installation media, and save/restart.

From here I'm going to talk only about Windows 10 installation, I didn't do Linux yet so can't comment on that. Once it restarts, you should get the 'press any key to boot from DVD' message, do so and the standard Windows installation begins. Walk through as normal until you get to the "Where to install" option - where you will most likely not see the RAID array as an option. Instead, use the option to browse media for drivers, and have the appropriate ones loaded onto the USB thumb drive. This part caught me out for quite some time - the drivers are here (for Win10 64 bit), it took me a while to work out which were the right ones (SATA Floppy and Intel RST), but every time I tried to use them it would find them, work for a while, then refuse to install. Eventually I noticed that the drivers on Intel's own site, here, were v14.8 compared to v14.5 on ASRock's site. Once I was using the latest Intel RAID drivers with the latest BIOS, everything went smoothly with the Win10 install.

When installation was complete, I then also had the LAN drivers from ASRock's site on the USB and updated them before connecting to my network. I use wired, the house is wired with Cat5e so I get gigabit and it's much more reliable than WiFi, which I leave for truly mobile devices. Similarly, I had the latest nVidia drivers and sound drivers and updated them, before connecting to the network and downloading updates.

Last steps were then to take a couple of restore points (before and after AV installation), and create a recovery USB drive - while I do have original Win10 media, a recovery drive is kinda handy to have for when things go wrong. I then partitioned the HDD to include a 1 TB section that I'm going to use to regularly back up the contents of the M2 SSD RAID array - remember it's RAID0 so vulnerable to total data loss should one of them fail. This is some backup, but not ideal - backup should really be on a different system, so for now it will also go to an external NAS (until I build a ZFS NAS, but that's another project for later in the year). So consider yourself warned if you do this that your data is at risk!
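If you'd rather script that regular copy than do it by hand, something like the following would do as a starting point. It's a bare-bones sketch, not what I actually run: the function name and the example paths are hypothetical, and it makes a full timestamped copy each time rather than anything incremental.

```python
# Bare-bones timestamped backup: copy a folder tree into a dated subfolder.
import shutil
from datetime import datetime
from pathlib import Path


def backup_tree(source: Path, backup_root: Path) -> Path:
    """Copy `source` into `backup_root/<name>-<timestamp>` and return that path."""
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    target = backup_root / f"{source.name}-{stamp}"
    shutil.copytree(source, target)  # creates `target`; fails if it already exists
    return target


# Example call (hypothetical paths, adjust to your own layout):
# backup_tree(Path("C:/Users/me/Projects"), Path("D:/Backups"))
```

A scheduled task could call this nightly. Old copies would still need pruning by hand, and a copy on a second disk in the same machine is no substitute for the off-machine backup mentioned above.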

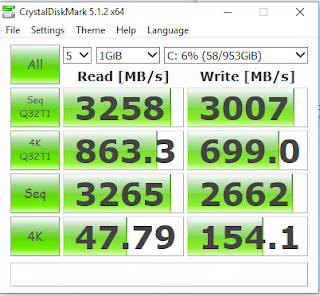

So how did it perform? Below is a CrystalDiskMark disk result:

You can see it's topping out near 3GB/s which is what I was expecting, and seems to point to DMI as the limiting factor. I'll be running more tests later and seeing if I can glean more details. Anecdotally however, this thing is really fast and smooth, large files just go to and from the disk like they were tiny - which isn't surprising when I just had a near 10x improvement in disk bandwidth!

And as to the graphics, well I loaded Fallout 4, set it to native 1440 instead of 1080, turned everything to the max, and it handled it very smoothly - there's a noticeable improvement in quality and experience, so probably a near 10x improvement there too, image below. I have no idea, though, how that pack brahmin got up on the roof...

Update Oct 29th 2017: The Windows 10 "Fall Creators Update" caused me no end of issues installing. Blue Screens of Death (BSOD) every time. The first was "CLOCK_WATCHDOG_TIMEOUT", which apparently, or so Microsoft tells me, is due to me overclocking my CPU. Except I'm not. I tried a couple of things, first of all updating the motherboard BIOS to the latest, which had no effect, then updating drivers. I had a ton of them to update, and I eventually gave up doing it manually and went with the paid version of "Driver Easy". I know I could do it all for free myself, I'm just at that point where my time is worth more. That did the trick and cured the watchdog timer, except now I got a different BSOD, this time "SYSTEM_SERVICE_EXCEPTION". It took me a few days, but in the end it turns out that this update has an issue with NVMe boot disks, especially the Samsung 950 Pro - the installer is looking everywhere but there during the reboot, can't find a disk, and so fails. Solution - in the BIOS Boot section, disable all options other than "Windows Boot Manager" and also physically disconnect all other drives (in my case the DVD drive, the HDD, and a USB memory stick I had in there). Maybe just one of those actions would have worked, I did them both. And everything installed first time.

So thanks, Microsoft, for making this a giant pain to install. Oh, and with the Windows 10 Creators Update, it looks like all privacy settings are reset to the default "Let everything access everything" level - type "Privacy" in the search bar in the bottom left and reset them to your preferred level (mine is "nothing"). So screw you MS for that. Oh, and Paint was deleted, now it's "Paint 3D". Screw you for that too.